Booking Wonder Woman tickets with a twist

I recently moved to Hyderabad. With a slew of software companies and eating joints around the block, it's a pretty cool place to live in.

One of the things you immediately notice on moving here (or at least I did) is the mad rage for movies. I'm talking jam-packed houses for even low-key movies. I recently got a glimpse of this mania when I had to postpone watching Guardians of the Galaxy Vol. 2 by a few days because I didn't book the tickets WELL in advance.

With Wonder Woman coming out on June 2nd, I've decided to try to get those sweet right-in-the-middle, farthest-from-the-screen seats at Prasads. So the idea is to get notified as soon as the booking option is available on BookMyShow.


Let's solve this problem!

Here's the plan:

- Scrape the BookMyShow webpage for show listings.
- If Wonder Woman is found, send an SMS through Twilio.
- Schedule this using cron on an application running on Google App Engine.

Easy-peasy, right?
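To make the plan concrete, here's a minimal sketch of the check itself, written as plain Python so it runs without any network access. The `check_and_notify` function, its `target` parameter and the notification text are all hypothetical; in the real app, the scraper and the Twilio call would be passed in as `fetch_titles` and `notify`.

```python
def check_and_notify(fetch_titles, notify, target="wonder woman"):
    """Hypothetical sketch: notify and return True if target is in any title.

    fetch_titles: a function returning a list of movie titles (the scraper).
    notify: a function taking the message text (the SMS sender).
    """
    for title in fetch_titles():
        # Case-insensitive substring match against the listing
        if target in title.lower():
            notify("Booking is open: " + title)
            return True
    return False


# Usage with stand-ins for the scraper and the SMS sender
sent = []
found = check_and_notify(lambda: ["Baywatch", "Wonder Woman"], sent.append)
print(found, sent)  # True ['Booking is open: Wonder Woman']
```

The scheduling part of the plan then just means calling this every so often, which is what App Engine's cron takes care of.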

Scraping

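    import requests
    from bs4 import BeautifulSoup


    def scrape(url):
        """Return the lower-cased names of the movies listed on a BookMyShow page."""
        r = requests.get(url)
        html = r.text
        soup = BeautifulSoup(html, 'html.parser')
        # Each movie title sits inside an element with the class "__name"
        tags = soup.find_all(class_="__name")
        movie_names = []
        for el in tags:
            try:
                movie_name = el.contents[1].contents[0].lower()
            except Exception:
                # Skip tags that don't have the expected structure
                continue
            movie_names.append(movie_name)
        return movie_names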

This is a simple function that fetches the page with requests and filters the relevant tags with BeautifulSoup. You might wanna look into the BeautifulSoup documentation for the nitty-gritty details of searching the DOM tree.

So now we have the names of the movies playing on a certain date.
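To see what `find_all(class_=...)` and the `.contents[1].contents[0]` chain actually pick out, here's a small sketch that runs on a hard-coded snippet instead of the live page. The markup is made up to match the shape the scraper expects; the real BookMyShow HTML isn't shown here and has most likely changed since.

```python
from bs4 import BeautifulSoup

# Hypothetical markup, shaped so that el.contents[1].contents[0] works
SAMPLE_HTML = """
<div class="__name">
  <a href="#">Wonder Woman</a>
</div>
<div class="__name">
  <a href="#">Baywatch</a>
</div>
"""


def extract_names(html):
    soup = BeautifulSoup(html, "html.parser")
    names = []
    for el in soup.find_all(class_="__name"):
        # contents[0] is the whitespace text node before <a>, so the link is contents[1]
        link = el.contents[1]
        # The link's first child is the title text itself
        names.append(link.contents[0].lower())
    return names


print(extract_names(SAMPLE_HTML))  # ['wonder woman', 'baywatch']
```

The whitespace text node before `<a>` is why the link sits at index 1 rather than 0, and it's also what makes index-based access brittle: reformatting the HTML shifts the indices. Something like `el.find("a").get_text()` would be less sensitive to that.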

Next, sending the messages using Twilio's API

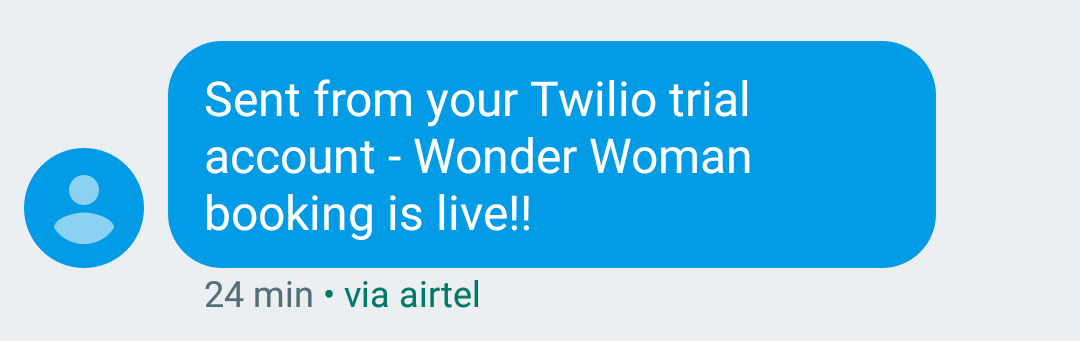

Twilio makes it very easy to use their API. All you need is an account SID and an auth token. They even give you a phone number and some credits on signing up. Sweet!

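    from twilio.rest import Client

    # Assumption: twilio_credentials.py (listed in the directory tree below)
    # defines these four names; its contents aren't shown in this post.
    from twilio_credentials import account_sid, auth_token, number_to, number_from

    client = Client(account_sid, auth_token)


    def send_message(text):
        message = client.messages.create(
            to=number_to,
            from_=number_from,
            body=text)
        # The message SID identifies this SMS in Twilio's logs
        print(message.sid)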

Now comes the somewhat tricky part.

Automating all of this on an application running on Google Cloud Platform

GCP's site has plenty of documentation regarding the initial setup. Check it out here.

This is the short version of all the stuff that needs to be done:

- Create a new project.
- Download and install the Cloud SDK.
- Clone a Hello World sample app.

The SDK also comes with a local development server to test the application.

Just for context, here's what the App Engine directory finally looks like:

    base_dir
    ├── lib (contains the libraries)
    ├── CONTRIB.md
    ├── LICENCE
    ├── README.md
    ├── app.yaml
    ├── appengine_config.py
    ├── cron.yaml
    ├── main.py
    ├── requirements.txt
    ├── scrapeBMS.py
    ├── send_message.py
    ├── source-context.json
    ├── source-contexts.json
    ├── twilio_credentials.py
    └── vendor.py

The requirements.txt file looks like this:

    Flask==0.10
    twilio
    beautifulsoup4
    requests==2.3.0

Google has pretty good documentation on using Python libraries on App Engine here.

Keeping things simple, I've chosen Flask as the web framework.

The two URLs I'm concerned with are:

- https://in.bookmyshow.com/hyderabad/movies/english
- https://in.bookmyshow.com/buytickets/prasads-large-screen/20170525

The first one is the English movies listing page and the second one is specific to Prasads. I need to check the listings for the first few days starting from the release day. Just to be safe, I'll check 2 days: the release day and the day after.

    import datetime

    import requests
    from flask import Flask

    from scrapeBMS import scrape1, scrape2

    app = Flask(__name__)
    app.config['DEBUG'] = True

    base_url_big_screen = "https://in.bookmyshow.com/buytickets/prasads-large-screen/cinema-hyd-PRHY-MT/"
    all_english_url = "https://in.bookmyshow.com/hyderabad/movies/english"
    CHECK_FOR_DAYS = 2
    word_list = ["wonder woman", "wonder", "woman"]
    release_date = datetime.datetime(2017, 6, 2)

    # GET_STATUS is the URL of a small status endpoint (its definition isn't shown
    # in this post) that returns "0" or "1" depending on whether the notification
    # has already been sent.


    def get_time_stamp_value(increment=0):
        # BookMyShow encodes the date in the URL as YYYYMMDD, e.g. 20170602
        date = release_date + datetime.timedelta(days=increment)
        return date.strftime("%Y%m%d")


    def get_status():
        # Check whether the message has already been sent, to prevent
        # multiple messages from being sent
        r = requests.get(GET_STATUS)
        print(r.text)
        return r.text.strip()


    @app.route('/tasks/check-for-WW')
    def cron():
        bms_sent = get_status()
        if bms_sent == "0":
            print("message not yet sent")
            # Check the listing of all English movies
            scrape2(all_english_url, word_list)
            bms_sent = get_status()
            # Check the next few days at Prasads Big Screen
            if bms_sent == "0":
                for i in range(CHECK_FOR_DAYS):
                    url = base_url_big_screen + get_time_stamp_value(i)
                    if scrape1(url, word_list):
                        break
        return '200 OK', 200
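The date handling in `get_time_stamp_value` and the `CHECK_FOR_DAYS` loop boil down to building one Prasads URL per day. Here's a standalone sketch of just that part, assuming (as main.py does) that the URL is the base URL followed by the date as `YYYYMMDD`; `urls_to_check` is a hypothetical helper name.

```python
import datetime

# Values copied from main.py
BASE_URL = "https://in.bookmyshow.com/buytickets/prasads-large-screen/cinema-hyd-PRHY-MT/"
RELEASE_DATE = datetime.date(2017, 6, 2)


def urls_to_check(days):
    """Build one showtimes URL per day, starting on the release day."""
    return [
        BASE_URL + (RELEASE_DATE + datetime.timedelta(days=i)).strftime("%Y%m%d")
        for i in range(days)
    ]


for url in urls_to_check(2):
    print(url)
# https://in.bookmyshow.com/buytickets/prasads-large-screen/cinema-hyd-PRHY-MT/20170602
# https://in.bookmyshow.com/buytickets/prasads-large-screen/cinema-hyd-PRHY-MT/20170603
```

Adding a `timedelta` instead of bumping the day number directly means month boundaries are handled for free: June 30 plus one day comes out as `20170701`.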

There are two versions of the scraper, one for each of the URLs mentioned above. They differ only in how they search the DOM tree.

    import requests
    from bs4 import BeautifulSoup


    def scrape1(url, word_list):
        """Scrape a Prasads showtimes page; notify and return True on a match."""
        r = requests.get(url)
        soup = BeautifulSoup(r.text, 'html.parser')
        tags = soup.find_all(class_="__name")
        for el in tags:
            try:
                movie_name = el.contents[1].contents[0].lower()
            except Exception:
                # Skip tags that don't have the expected structure
                continue
            if check_for_keyword(movie_name, word_list):
                send_notifications()
                return True
        return False

    def scrape2(url, word_list):
        """Scrape the English movies listing page; notify on the first match."""
        r = requests.get(url)
        soup = BeautifulSoup(r.text, 'html.parser')
        tags = soup.find_all("div", class_="wow fadeIn movie-card-container")
        for el in tags:
            try:
                # The movie name is the alt text of an element nested inside each card
                movie_name = el.contents[1].contents[1].contents[1].contents[1]['alt'].lower()
            except Exception:
                continue
            if check_for_keyword(movie_name, word_list):
                send_notifications()
                break

On a match, both scrapers call send_notifications, which in turn calls the Twilio subroutine. To decide whether there is a match, they use the following function, which does a simple substring comparison:

    def check_for_keyword(keyword, word_list):
        print(keyword)
        for word in word_list:
            if word in keyword:
                # BMS booking live!
                return True
        return False
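Because `check_for_keyword` does a plain substring test against every entry in `word_list`, the single words "wonder" and "woman" make it deliberately liberal. This sketch runs the same logic on a few made-up, lower-cased listing titles to show the trade-off; the titles are hypothetical.

```python
word_list = ["wonder woman", "wonder", "woman"]


def check_for_keyword(keyword, word_list):
    # Same substring logic as the post's function, without the print
    return any(word in keyword for word in word_list)


# Made-up, lower-cased listing titles
for title in ["wonder woman (3d)", "baywatch", "pretty woman"]:
    print(title, check_for_keyword(title, word_list))
# wonder woman (3d) True
# baywatch False
# pretty woman True
```

There's a catch worth knowing about: with the send-once flag, a false positive like "pretty woman" would use up the single notification. The broad list trades that risk for catching variants such as a 3D listing.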

And send_notifications sends the SMS and, if that succeeds, records that the notification has gone out:

    def send_notifications():
        # MESSAGE and update_bms_sent() are defined elsewhere (not shown in this post)
        message_sent = False
        # Try sending the success message
        try:
            send_message(MESSAGE)
            message_sent = True
        except Exception as e:
            print(str(e))
        if message_sent:
            # Mark the notification as sent (bms_sent) so later cron runs skip it
            try:
                update_bms_sent()
            except Exception as e:
                print(str(e))
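The ordering in `send_notifications` is what keeps this to a single SMS: the flag is only updated after Twilio accepts the message, so a failed send is simply retried on the next cron run. Here's a self-contained sketch of that pattern. A hypothetical in-memory `SentFlag` class stands in for the `GET_STATUS` / `update_bms_sent` pair (whose implementation isn't shown in the post), and the check from `cron()` is folded into the same hypothetical `notify_once` function.

```python
class SentFlag:
    """Hypothetical stand-in for the post's status URL: "0" = not sent, "1" = sent."""

    def __init__(self):
        self.value = "0"

    def get(self):
        return self.value

    def set_sent(self):
        self.value = "1"


def notify_once(flag, send):
    """Send at most one notification; only mark it sent if sending worked."""
    if flag.get() != "0":
        return False
    try:
        send()
    except Exception as e:
        # Leave the flag alone so the next cron run tries again
        print("send failed, will retry on the next cron run:", e)
        return False
    flag.set_sent()
    return True


flag = SentFlag()
print(notify_once(flag, lambda: None))  # True  (first run sends)
print(notify_once(flag, lambda: None))  # False (flag already set)
```

An in-memory flag like this is only for illustration. App Engine may run several instances and restart them at any time, so the real flag has to live in persistent storage, as the post's status URL presumably does. Also note that if the send succeeds but updating the flag fails, the next run will send the SMS again.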

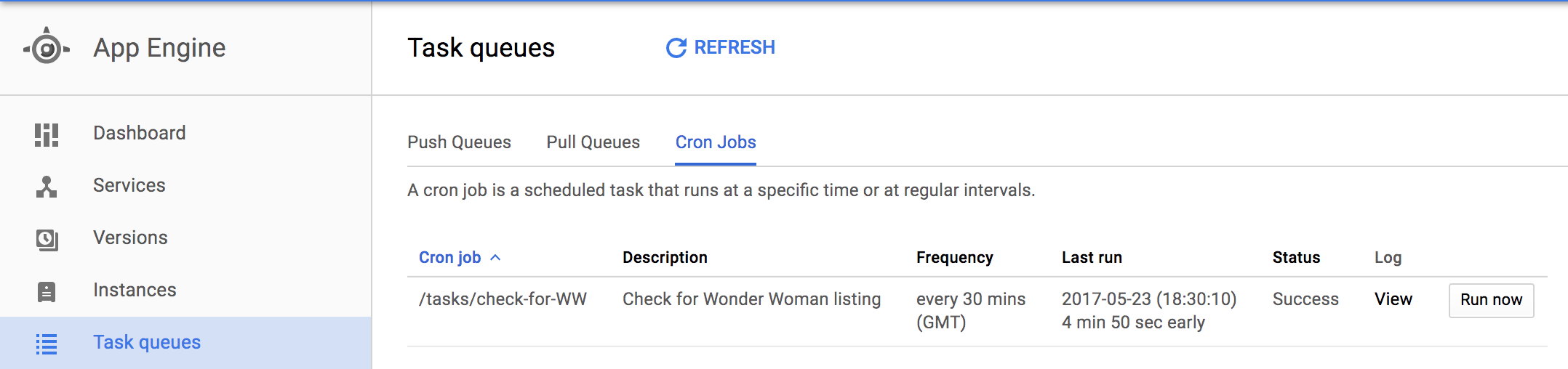

Google App Engine has a pretty cool implementation of cron that is geared towards web applications. Simply put, a cron job on App Engine invokes a URL using an HTTP GET request, which the application then handles. In our case, the cron job will invoke /tasks/check-for-WW.

This is written in the cron.yaml file as:

    cron:
    - description: Check for Wonder Woman listing
      url: /tasks/check-for-WW
      schedule: every 30 mins
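Since the cron job is just an HTTP GET on `/tasks/check-for-WW`, anyone who finds that URL can trigger it too. One optional hardening step is to check the `X-Appengine-Cron` header, which App Engine adds to cron requests and strips from external ones. This is a minimal sketch of the route with that check, separate from the post's main.py: the scraping logic is left out, and Flask's test client stands in for the local development server.

```python
from flask import Flask, abort, request

app = Flask(__name__)


@app.route('/tasks/check-for-WW')
def cron():
    # App Engine sets this header on cron requests and strips it from
    # external ones, so anything without it is rejected
    if request.headers.get('X-Appengine-Cron') != 'true':
        abort(403)
    # ... the scraping logic from main.py would run here ...
    return '200 OK', 200


client = app.test_client()
print(client.get('/tasks/check-for-WW').status_code)  # 403
print(client.get('/tasks/check-for-WW',
                 headers={'X-Appengine-Cron': 'true'}).status_code)  # 200
```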

Once cron.yaml is written, the app can be deployed using:

    gcloud app deploy

The cron configuration can be deployed using:

    gcloud app deploy cron.yaml

So the cron job runs every 30 minutes, checking for the Wonder Woman listing on the BMS page. All of this can be viewed in the UI at console.cloud.google.com, as shown below:

This is how the SMS should look if everything works out:

That's it! Let's hope the movie turns out to be good.

Update: Finally saw it on opening day! It is every bit as glorious as I expected it to be.